What if I asked you to write down the top three things you’re worried about today? I don’t mean personal stuff; I mean big-picture issues. What would they be? If you feel like it, write them down now; you can do it in the comments here. I’m curious to see what you say. But here’s my question: would AI make your top three? Is it weird that it’d probably be my number one?

I think most people would list something about the economy, which is very understandable. At the same time, a lot of experts are saying that AI is about to have a pretty severe impact on jobs and the economy. I also think a lot of people would say something about the backsliding of democracy, and in a moment, I want to talk about how AI has a lot to do with that.

But zooming out, I think no matter what issue you care about—whether it’s global health or immigration, war and peace or education, whether it’s progressive or conservative, whether it’s local or global—no matter what it is, any problem you’re trying to solve in the world today largely comes down to people being able to cooperate. Of course, to cooperate, we need to be able to communicate. And to communicate, we need some base level of consensus reality, where facts are facts, fiction is fiction, and there’s an integrity to the information we’re all getting. Well, ask yourself: do you feel like we have that nowadays?

Probably not, right? Trust is low all around right now, and it’s getting worse. And why is that? Well, one way to answer that question is to divide the world up into Us and Them, point our fingers at the other side, and say, “It’s all their fault!” And yes, there are dishonest people out there, communicating in bad faith and making it hard to solve problems. But there always have been. That’s not new. What’s new is the technology. If you want to understand what’s going on right now with the lack of trust in our information, with our inability to communicate, and with our failure to cooperate—I’d say look at our digital technology.

Social media and “the algorithm”

For the last decade or two, the dominant digital technology has been social media. And I’m not saying nothing good happens on social media. Great stuff happens on social media all the time. I spent years of my life using social media technology to make collaborative art with people all over the world; it’s one of my favorite things I’ve ever done. The problem with social media is not the technology itself. It’s how big businesses use the technology to make as much money as possible.

Social media is supposed to be a way for you to communicate with people and get information. But you know that’s not what today’s biggest platforms are really built to do. They’re built to hook you, take as much of your time and attention as possible, and make money along the way with ads.

So, say you come looking for information about something going on in your life, or something going on in the world. Is the platform going to serve you information that’s trustworthy? Maybe. But again, that’s not what it’s built to do. Instead, it’s going to run your user profile through an attention-maximizing algorithm, compare the data it has on you to the data it has on a whole bunch of other users, and statistically calculate which piece of information is most likely to hook you. Could that piece of information end up being true? Sure. Or it could end up telling you the earth is flat. The truth doesn’t matter to the algorithm; only your attention and the company’s ad revenue do.

The similar businesses of AI and social media

People already know about this problem with social media. But social media is not the same thing as AI. Or is it? Well, it’s true that the two technologies are different, but the businesses of AI and social media look like they’re heading in pretty similar directions.

First of all, a lot of the leading AI companies are social media businesses: Meta, X, ByteDance (which owns TikTok), and Google (which owns YouTube and isn’t strictly a social media company, but is an advertising business with a lot of the same incentives). The exceptions are Microsoft and Anthropic, which do most of their business in enterprise, meaning they sell their services to companies whose employees use them for work. But the biggest player in the AI industry right now is OpenAI, which makes ChatGPT. It’s a newer company, so it hasn’t had to show a profit yet, which reminds me of YouTube and Facebook back in the good old days before they had to turn on ads. However, OpenAI is reportedly building a social network, and it just appointed a new CEO of Applications who previously worked at Facebook for ten years as head of the Facebook app. So it seems pretty clear where ChatGPT is headed.

These AI products are being built to do the same thing as today’s most popular social media products: hook you, keep you, and serve you ads. Anything else they happen to accomplish will be incidental. Some of it’ll be good for users and the wider world; some of it’ll be bad. But that won’t matter to the attention-maximizing algorithms or the businesses they serve. Not because the people running the businesses necessarily mean any harm. Just because big businesses can’t prioritize things like the integrity of our information or society’s ability to communicate or cooperate. They can only prioritize one thing—making money.

Whatever negative impact social media has had on the world, AI is going to have the same impact, only a lot worse, with a lot more money, data, and compute power driving it. Instead of algorithmically serving up the next piece of user-generated content, it will algorithmically generate the next piece of content, and eventually keep you hooked with something that feels less like a feed of content and more like hanging out with your best friend or lover. But ultimately, it’ll still be running the same attention-maximizing algorithm, with the same business incentives behind it.

Pouring gas on all the fires

So what does this all mean for those of us who look around and see a world heading in the wrong direction? Well, like I said, I believe whatever issue you care about, our information and communication technology is going to make or break our ability to cooperate on those issues. But let’s just take one example that a lot of people all over the world are worried about right now: the backsliding of democracy.

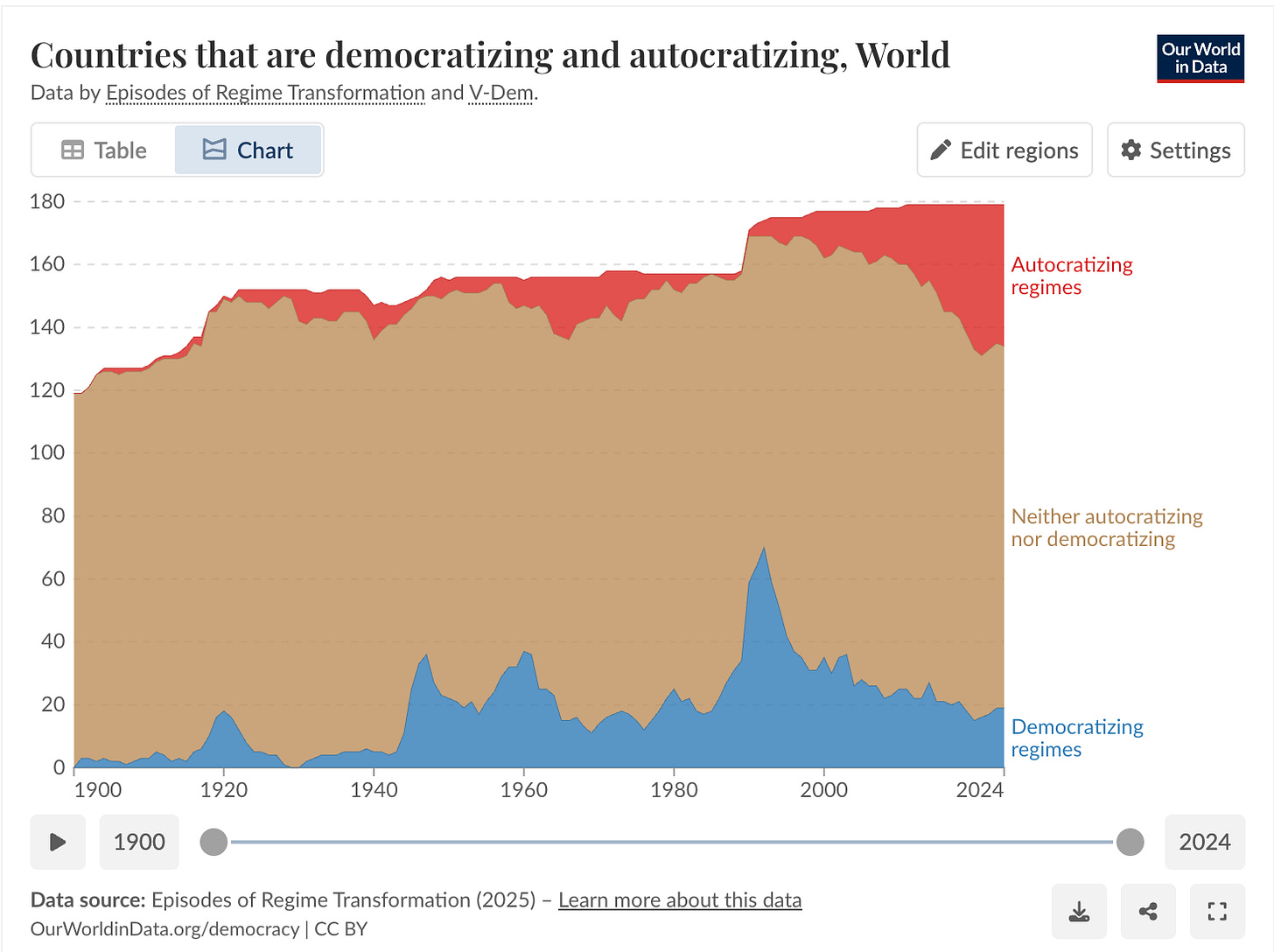

In 2008, I joined my first social media platform. It was Twitter. Okay, maybe I had a MySpace account before that. But anyway, I’d say it’s pretty defensible to mark 2008 as roughly the start of modern social media’s rise in popularity. Now, look at this chart. Since around 2008, the world has seen a pretty sharp increase in autocratic regimes coming to power.

Can I prove that social media is directly causing a rise in autocracy? No. But I’m definitely not the only one noticing that attention-maximizing algorithms favor authoritarians and dictators. And it only stands to reason. The messages of authoritarians and dictators are usually simple, extreme, based in fear and anger, exactly the kind of thing that can quickly hook you and keep your attention. Whereas messages about real democracy that’ll actually inform people on complex policy issues and facilitate their legitimate participation in self-government? Definitely not the kind of thing that makes for quick, snackable, attention-grabbing content—whether it’s delivered by a social media feed or a chatbot.

It’s not that the algorithm is programmed to favor autocracy over democracy. It just favors whatever gets the most attention. And it so happens that conspiracy theories, extremism, fear-mongering, and other kinds of authoritarian rhetoric are really good at hooking people.

Here in the US, a lot of us are pretty worried about the backsliding of American democracy right now. But in my opinion, Donald Trump and his MAGA movement are not the causes of this problem. They’re symptoms of a deeper problem that we won’t begin to solve until we’ve made some big changes to our information and communication technology.

Building a bright future

Now, most enthusiasts will tell you that AI can help solve the biggest problems we’re facing today. And you might be surprised to hear that I agree with them. I also believe that advanced machine learning technology has the potential to contribute enormously to upgrading our democracy, improving education, reducing poverty, and ushering in a new era of peace and harmony. I can see it happening. But it won’t happen by default.

If the design and deployment of AI are guided purely by profit, we know where it’ll go. It’ll go the same way social media has gone. It’ll be one big race to the bottom, to see who can build the most effective attention-maximizing algorithm. And as we humans get more and more attached to our AI assistants and companions, we’ll lose more and more of our trust in each other. We’ll let these algorithms mediate more and more of our communication, and automate more and more of our cooperation. The rich will get richer, the poor will get poorer, the oceans will get warmer, and the wars will get bloodier.

This is why I’m talking about AI. Because I’m a dad. Because I’m worried about my kids’ future. And because I don’t think it has to be this way. I agree with Silicon Valley that we’re at a turning point in history right now, as this technology begins to spread through society. But while the giants of the tech industry would like us all to sit back and scroll our time away, distracted, as they build a future for themselves—we don’t have to take that path of least resistance. I really think this is the task of our generation. And I think we could still do a pretty good job, if we’re willing to pay attention, and try. 🔴